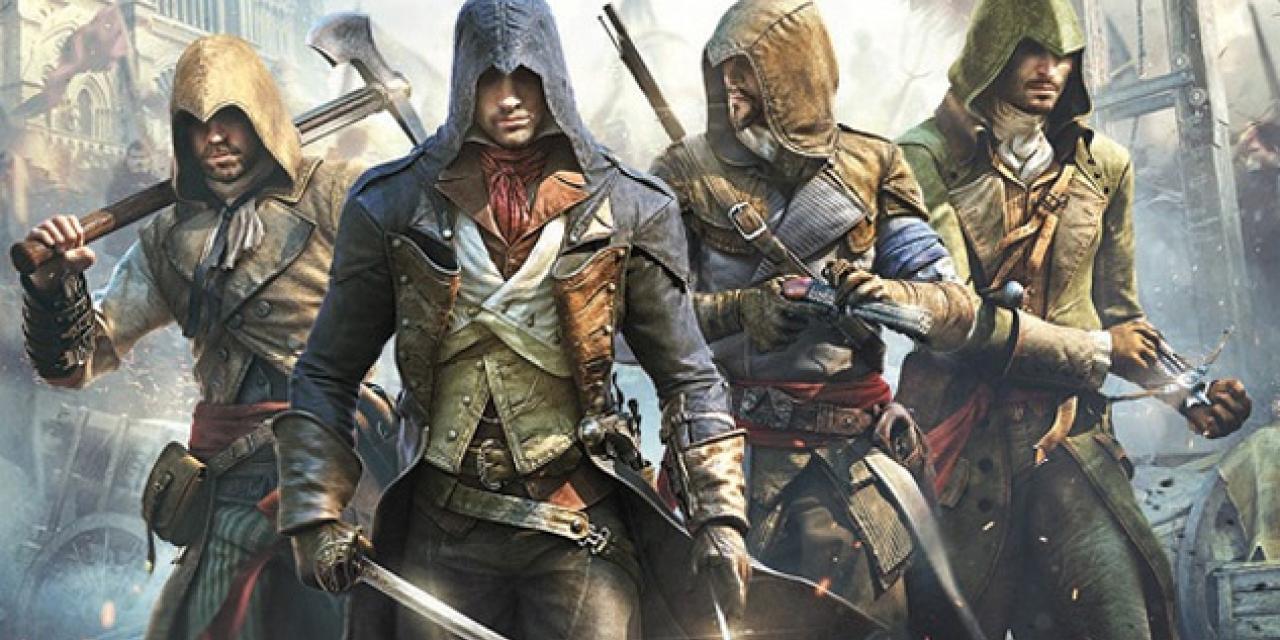

There's been a lot of talk lately about games like Evil Within and Assassin's Creed Unity, all centred on the fact that these games can't render at 1080p or, it seems, at anything above 30 frames per second.

NB: Since this piece was written, Evil Within has been patched to allow 60 FPS on PC.

While that sort of frame rate is fine if you're making a movie, it's practically unheard of for PC gamers, and yet the developers are trying to spin it as an intended improvement, something that makes the game more "filmic."

To add insult to injury, both games had particularly high minimum and recommended specifications on PC, leaving people wondering why they need a GPU that costs over $400 on its own to play a game at just 30 frames per second, when that same game struggles to render properly on a console that costs about the same in its entirety.

Of course, a lot of this is just gamers getting angry for the sake of it. We do like doing that, after all, partly because we're passionate about gaming and partly because gaming is intrinsically linked with the internet, which is itself a beast of raw emotion and typos.

So how much does all this "resolutiongate" nonsense actually matter? Do we need games rendered at a certain resolution, and do they need to hit a certain frame rate? Why can't this latest generation of consoles seem to handle anything more than that, and why are gaming PCs being specced out of playing these games?

That's a lot of questions, but I'll try to answer all of them. We'll go in reverse order, from the practical to the more ethereal.

Why are the specifications so high for these games?

Most of it is to do with video memory. In a desktop PC, your GPU has its own separate memory, most likely GDDR5, which has far more bandwidth than your system memory. If the card is from one of the recent generations, it probably has a gigabyte or more, while your system has at least 4GB of RAM, and realistically 8GB or more.
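Peak memory bandwidth is simply bus width multiplied by transfer rate. A rough sketch of the gap, assuming a typical dual-channel DDR3-1600 desktop and a hypothetical graphics card with a 256-bit GDDR5 bus running at an effective 6 Gbps (both configurations are illustrative assumptions, and these are theoretical peaks, not measured figures):

```python
# Peak theoretical bandwidth = bus width (in bytes) x effective transfers per second.
# The two configurations below are illustrative assumptions, not measurements.

def bandwidth_gb_s(bus_width_bits: int, transfers_per_sec: float, channels: int = 1) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_sec * channels / 1e9

# Assumed desktop system memory: dual-channel DDR3-1600 (64 bits per channel, 1600 MT/s)
system_ram = bandwidth_gb_s(64, 1600e6, channels=2)

# Assumed graphics card: 256-bit GDDR5 bus at an effective 6 Gbps per pin
gpu_ram = bandwidth_gb_s(256, 6e9)

print(f"System RAM: {system_ram:.1f} GB/s")
print(f"GPU GDDR5:  {gpu_ram:.1f} GB/s")
print(f"Ratio:      {gpu_ram / system_ram:.1f}x")
```

Under those assumptions the graphics memory offers roughly seven times the bandwidth of system RAM, which is why a desktop GPU can't simply borrow main memory without a large performance hit.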

The latest consoles (PS4 and Xbox One), on the other hand, come with 8GB of system memory, but the difference is that the CPU and GPU share it. That means that, even after the operating system reserves its share, these consoles effectively have several gigabytes of video memory to work with. Since your desktop can't share its system memory, you're only able to compete in that respect if you have a $1,000+ GPU.

That's not the only reason, but it's the main one. These new games have plenty of video memory to work with, so textures are absolutely huge. It's also the reason the install sizes for these games just took a giant leap over the last generation. That and bigger internal hard drives.

Unfortunately, the reason these consoles struggle to hit the mark on resolution and frame rate is that they're quite underpowered in other ways. While the CPUs are somewhat held back, the GPUs are the biggest culprits. The Xbox One's GPU puts out 1.31 teraflops of raw power, whilst a mid-range desktop GPU from the last generation can manage roughly twice that.

The PS4's GPU can do 1.84 teraflops, mainly because it has more compute units (18, to the Xbox One's 12). It also pairs its shared system memory with much faster GDDR5, compared to the Xbox One's DDR3, but even so, that's hardly pushing the boundaries of graphical performance.

To give you a desktop comparison, the Xbox One is fitted with a tweaked version of the HD 7790 chip, whilst the PS4 is fitted with something more akin to the HD 7870.
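The teraflop figures quoted above follow from a simple formula for AMD's GCN architecture, which both consoles and the HD 7000 series use: each compute unit has 64 shaders, and each shader can do two floating-point operations per clock (a fused multiply-add). A minimal sketch, using the consoles' published compute-unit counts and clock speeds; these are theoretical peaks, not real-world performance:

```python
# Peak single-precision throughput for an AMD GCN GPU:
#   compute units x 64 shaders per CU x 2 FLOPs per clock (fused multiply-add) x clock speed

def gcn_tflops(compute_units: int, clock_mhz: float) -> float:
    """Return theoretical peak TFLOPS for a GCN GPU."""
    shaders = compute_units * 64
    return shaders * 2 * clock_mhz * 1e6 / 1e12

xbox_one = gcn_tflops(12, 853)   # 12 active CUs at 853 MHz
ps4 = gcn_tflops(18, 800)        # 18 active CUs at 800 MHz
hd_7870 = gcn_tflops(20, 1000)   # desktop HD 7870: 20 CUs at 1 GHz

for name, value in [("Xbox One", xbox_one), ("PS4", ps4), ("HD 7870", hd_7870)]:
    print(f"{name:8s} {value:.2f} TFLOPS")
```

The PS4 actually runs at a slightly lower clock than the Xbox One; its lead comes almost entirely from having half as many compute units again.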

Those are hardly contemporary powerhouses, and these limitations are the main reason this generation struggles to hit 1080p and a high frame rate (among other reasons, but I'll get to those in a minute). It's certainly not because 30 frames per second is better than 60. It's just not. It works for films because they have motion blur, but games are about more than something looking smooth; they're about something feeling smooth, and that can only happen at decent frame rates.
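Part of why 30 FPS feels worse than it looks is simple arithmetic: a game can show the result of your input at most once per frame, so halving the frame rate doubles the time each frame spends on screen. A minimal sketch of frame time at a few rates, with 24 FPS included as the usual cinema rate for comparison:

```python
# Frame time: how long each frame stays on screen, which is also
# the time budget the game has to produce the next one.

def frame_time_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps

for fps in (24, 30, 60):
    print(f"{fps:>3} FPS -> {frame_time_ms(fps):5.1f} ms per frame")
```

At 60 FPS each frame lasts about 16.7 ms; at 30 FPS it lasts about 33.3 ms, so every response to a button press can arrive up to twice as late.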

It's marketing nonsense to try and cover up the fact that this generation's consoles simply aren't physically capable of doing what PCs have been doing for years and the last-gen consoles managed at a lower visual fidelity.

That said, this generation of games, while obviously a little clunkier than its predecessors, is very pretty, despite the lack of 1080p. Of course, people are up in arms about this, but 1080p isn't some gold standard. PC gamers surpassed it as the holy grail resolution long ago, and just because those with standard HD monitors still use it doesn't mean it's a necessity.

Yes, it reduces the need for anti-aliasing, and on a 1080p screen it avoids the blurring you get when a game isn't rendered at the display's native resolution, but there are a lot of other effects I'd rather see before a game is rendered at 1080p. I'm sure you'd agree too.

Look back at a game from 10 years ago, like Half-Life 2. It was a great-looking game at the time, but today its lighting is stark and unnatural, everything looks flat and the character models are blocky. Which would you rather have: a version with tessellation at 900p, or just a simple high-resolution version?

Advanced volumetric lighting and sub-surface scattering on character models at 800p, or a 1080p rendering of the original game?

Chances are, you'd go for the former in each of those scenarios. The point is, there are things much more important than resolution. It's like engine power: there's a reason F1 cars don't use 20-litre engines. A smaller engine, with some tuning, is more efficient and produces better results. The same goes for resolution.
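The trade-off is easier to see in raw pixel counts. Per-pixel work such as shading scales roughly with the number of pixels drawn, so a lower resolution frees up GPU time that can go towards lighting and other effects. A rough sketch, assuming 16:9 frames with the width rounded to the nearest pixel (the exact width used for "800p" varies between games, so 1422 pixels here is an assumption):

```python
# Pixels per frame at common 16:9 vertical resolutions, relative to 1080p.
# Assumes a 16:9 aspect ratio, with the width rounded to the nearest whole pixel.

def pixels(height: int) -> int:
    """Total pixels in a 16:9 frame of the given height."""
    width = round(height * 16 / 9)
    return width * height

full_hd = pixels(1080)
for height in (1080, 900, 800, 720):
    share = pixels(height) / full_hd
    print(f"{height}p: {pixels(height):>9,} pixels ({share:.0%} of 1080p)")
```

By this measure, 900p draws about 69% of the pixels of 1080p, so roughly 30% of the per-pixel budget is freed up each frame.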

So ultimately, frame rates are important, even if resolution isn't, but why do so many care about it?

Because we've been taught to. This is entirely the fault of developers, hardware manufacturers and retailers. They've spent the last 10 years telling us to buy 1080p monitors and TVs, talking up games that could handle 720p and then eventually 1080p. HD text in games like Dead Rising meant those using SD TVs couldn't read any quest information, and old games are constantly being re-released as "HD" remasters.

Buy HD, they said. HD is important, they said.

And now, apparently, it's not. We knew that all along really, but it's no wonder it will take some time to remember, since we've been sold on the idea for years.

Frame rate is important, though. Stop trying to trick us, guys. We know better.